
Code | Arxiv | Oral | GScholar | Rui Chen, Hao Su

Notes

(2023-12-24)

Abs & Intro

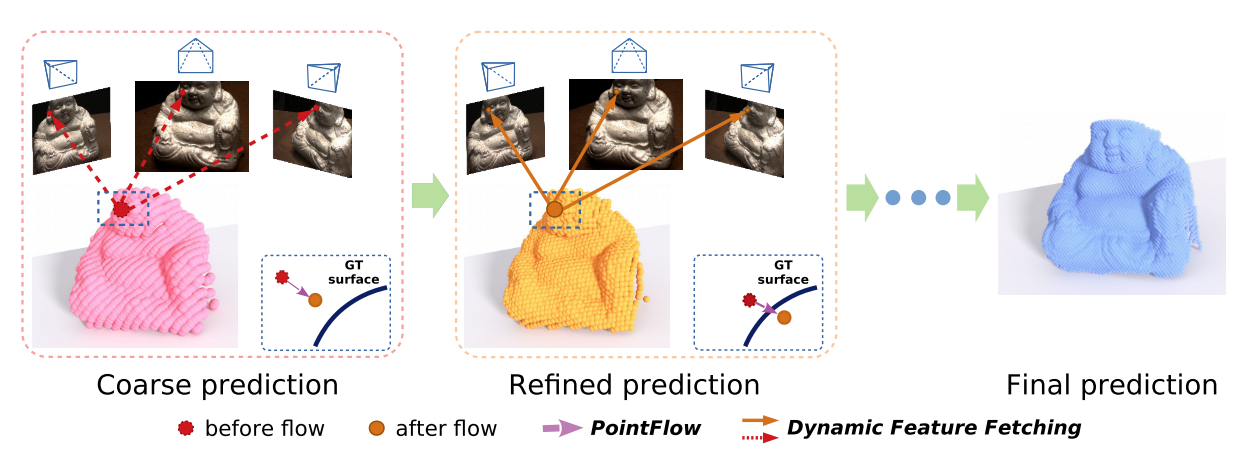

Use point cloud to refine depth map rather than regularizing (“squashing”) cost volumes.

- The points move along the ray corresponding to each pixel.

- The step size is determined by neighbors.

Refine the coarse point cloud by iteratively estimating the residual from the current depth to the target depth.

- The target depth is obtained from the ground-truth point cloud.

Output: a dense point cloud, as opposed to the sparse point cloud from SfM (COLMAP) used by 3DGS.

A depth map derived from the point cloud representation is not bound by the resolution limit of a cost volume.

Optimization objective: minimize the distance from point to the surface, with the supervision of depth map

- Depth estimation doesn’t involve opacity for rendering, so can the RGB appearance be used as loss function?

PointFlow: move each point towards the surface. In contrast, 3DGS splits or clones a Gaussian along the gradient of its position, controlled by several hyper-parameters.

How well does this method generalize?

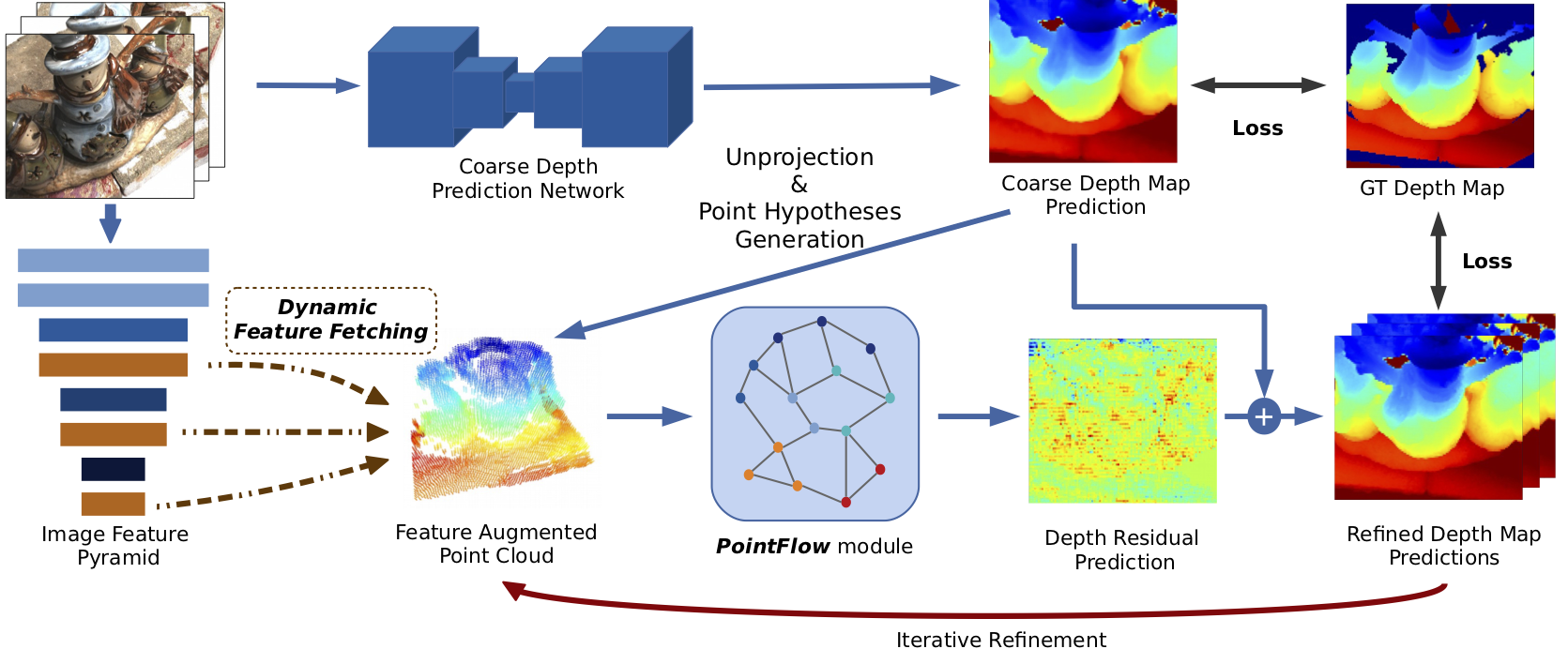

Pipeline

- Point cloud initialization from a small coarse depth map of the reference image.

  - 48 homographies (depth hypotheses) are used to warp each source feature map, which is 1/8 the size of the original image.
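The initialization step can be illustrated with a minimal sketch that lifts a coarse depth map into a point cloud in the reference camera frame. It assumes a plain pinhole camera; the function name `unproject_depth` and the toy intrinsics are illustrative and not taken from the PointMVSNet code.

```python
def unproject_depth(depth, fx, fy, cx, cy):
    """Lift an H x W depth map (list of rows) to 3D points in the camera frame."""
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            # Pinhole model: X = d * K^{-1} [u, v, 1]^T
            points.append(((u - cx) * d / fx, (v - cy) * d / fy, d))
    return points


# Toy 2 x 2 depth map with made-up intrinsics.
depth = [[2.0, 2.0], [4.0, 4.0]]
pts = unproject_depth(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(pts)
```

Each pixel yields exactly one point, so the point cloud has the same resolution as the coarse depth map.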

- Assign 2D and 3D context features to each point.

- 2D feature: project each point onto the 3-level feature maps of each view, with the camera focal lengths and principal point (cx, cy) scaled to each level.

  The feature vectors retrieved from the N views are merged into a variance for each level j of the feature maps:

$$𝐂ʲ = \frac{\sum_{i=1}^{N} (𝐅ʲ_i - \bar{𝐅ʲ})^2}{N}$$

- Only the feature vectors at the projection locations are taken, rather than processing the entire feature map, which improves efficiency.
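As a rough illustration of the projection step, the sketch below scales the intrinsics to a feature-map level and projects one 3D point to pixel coordinates. It assumes the point is already expressed in that view's camera frame and that the intrinsics scale linearly with image size (pixel-center offsets are ignored); `scale_intrinsics` and `project` are hypothetical helper names.

```python
def scale_intrinsics(fx, fy, cx, cy, scale):
    """Scale focal lengths and principal point to a resized image or feature map."""
    return fx * scale, fy * scale, cx * scale, cy * scale


def project(point, fx, fy, cx, cy):
    """Project a 3D point (camera frame, z > 0) to pixel coordinates (u, v)."""
    x, y, z = point
    return fx * x / z + cx, fy * y / z + cy


# Made-up full-resolution intrinsics, scaled to a 1/8-size feature map.
fx8, fy8, cx8, cy8 = scale_intrinsics(800.0, 800.0, 320.0, 240.0, 1 / 8)
uv = project((1.0, 2.0, 4.0), fx8, fy8, cx8, cy8)
print(uv)
```

The feature vector for the point would then be fetched at `uv` on that level, typically with bilinear interpolation.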

- 3D feature: normalized point coordinates $𝐗ₚ$

- Concatenate features: $Cₚ = 𝐂¹ ⊕ 𝐂² ⊕ 𝐂³ ⊕ 𝐗ₚ$
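The variance and concatenation steps above can be sketched for a single point as follows. This is a simplified pure-Python illustration with tiny feature vectors; the function names and the toy values are assumptions, not the repository's implementation.

```python
def variance_feature(view_features):
    """Element-wise variance over the N per-view feature vectors of one point."""
    n = len(view_features)
    dim = len(view_features[0])
    mean = [sum(f[c] for f in view_features) / n for c in range(dim)]
    return [sum((f[c] - mean[c]) ** 2 for f in view_features) / n
            for c in range(dim)]


def point_feature(per_level_view_features, xyz_normalized):
    """Concatenate the variance feature of every pyramid level with the 3D coordinates."""
    feat = []
    for level_features in per_level_view_features:
        feat.extend(variance_feature(level_features))
    return feat + list(xyz_normalized)


# One point, 3 pyramid levels, N = 2 views, 2 channels per level (toy numbers).
levels = [[[1.0, 0.0], [3.0, 0.0]]] * 3
c_p = point_feature(levels, (0.1, 0.2, 0.3))
print(c_p)
```

Because the variance is taken over views, the feature length does not depend on N, so the number of input views can vary.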

- Define displacement steps with point hypotheses.

The 3D point cloud is obtained by unprojecting the 2D depth map. A free 3D displacement would have too many degrees of freedom, so each point is confined to move along the ray emitted from the reference camera through its pixel. Moving a point along this ray refines the per-pixel depth.

- In the above figure, • is a real point and o is a point hypothesis, denoted $\tilde{𝐩ₖ}$, which is associated with a real point $𝐩ₖ$:

$$\tilde{𝐩ₖ} = 𝐩ₖ + k s 𝐭, \quad k = -m, …, m$$

where k is the step index, s is the step size, and 𝐭 is the ray direction.

m = 1, so each real point has 2m + 1 = 3 hypotheses (k = -1, 0, 1).

- Aggregate features of the n nearest neighbors.

Use Dynamic Graph CNN to aggregate neighboring points
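A minimal sketch of neighbor aggregation in the spirit of an edge convolution: for each point, build edge features from its own feature and the difference to each of its n nearest neighbors, then max-pool over the neighbors. The learned MLP that a real DGCNN layer applies to each edge is omitted, and the sign convention of the difference is an assumption.

```python
import math


def knn_indices(points, i, n):
    """Indices of the n nearest neighbors of points[i], excluding the point itself."""
    others = [j for j in range(len(points)) if j != i]
    others.sort(key=lambda j: math.dist(points[i], points[j]))
    return others[:n]


def edge_conv_max(points, feats, n):
    """Max-pool edge features [f_i, f_j - f_i] over the n nearest neighbors of each point."""
    out = []
    for i, f_i in enumerate(feats):
        edges = [f_i + [a - b for a, b in zip(feats[j], f_i)]
                 for j in knn_indices(points, i, n)]
        # Channel-wise maximum over all edges of point i.
        out.append([max(channel) for channel in zip(*edges)])
    return out


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
feats = [[1.0], [2.0], [10.0]]
print(edge_conv_max(points, feats, n=1))
```

The brute-force neighbor search here is quadratic in the number of points; the repository instead compiles a CUDA extension for the gather step, which is what the build errors later in these notes refer to.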

- Use an MLP to map the aggregated feature to probabilities of the point hypotheses.

The PointFlow module is applied iteratively so that the points approach the surface step by step.

The predicted depth residual toward the target depth is the probability-weighted sum (expectation) of all predefined hypothetical steps:

$$Δdₚ = 𝐄(ks) = ∑_{k=-m}^{m} ks × Prob(\tilde{𝐩ₖ})$$
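The hypothesis construction and the expectation can be sketched together for a single point. The sketch assumes that the per-hypothesis scores are turned into probabilities with a softmax; the function names and numbers are illustrative only.

```python
import math


def point_hypotheses(p, t, s, m):
    """Return {k: p + k*s*t} for k = -m..m along the ray direction t."""
    return {k: tuple(pc + k * s * tc for pc, tc in zip(p, t))
            for k in range(-m, m + 1)}


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(x - peak) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]


def expected_residual(scores, s, m):
    """Depth residual as the expectation of k*s under the hypothesis probabilities."""
    probs = softmax(scores)
    return sum(k * s * prob for k, prob in zip(range(-m, m + 1), probs))


hyps = point_hypotheses(p=(0.0, 0.0, 2.0), t=(0.0, 0.0, 1.0), s=0.5, m=1)
print(hyps)
print(expected_residual([0.0, 0.0, 0.0], s=0.5, m=1))       # uniform scores
print(expected_residual([-100.0, -100.0, 100.0], s=0.5, m=1))  # confident in k = +1
```

Taking the expectation rather than the argmax keeps the prediction continuous, so a point can move by a fraction of the step size.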

- Upsample the refined depth map and shrink the interval between point hypotheses.

- Loss: the absolute error between the refined depth map and the ground-truth depth map, accumulated over all iterations.

$$L = ∑_{i=0}^{l} \Big( \frac{1}{s^{(i)}} ∑_{p∈𝐏_{valid}} \| 𝐃_{GT}(p) - 𝐃^{(i)}(p) \|₁ \Big)$$
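A small sketch of this loss on flattened depth maps. For simplicity it assumes every iteration uses the same pixel grid and the same set of valid pixels, whereas in the method the depth map is upsampled between iterations; `pointflow_loss` is a hypothetical name.

```python
def pointflow_loss(depth_gt, depth_preds, steps, valid):
    """Sum over iterations of the L1 depth error on valid pixels, scaled by 1/s_i."""
    total = 0.0
    for depth_i, s_i in zip(depth_preds, steps):
        l1 = sum(abs(depth_gt[p] - depth_i[p]) for p in valid)
        total += l1 / s_i
    return total


depth_gt = [1.0, 2.0, 3.0]
depth_preds = [[1.5, 2.0, 0.0],   # iteration 0
               [1.25, 2.0, 0.0]]  # iteration 1
steps = [0.5, 0.25]               # step size s shrinks between iterations
valid = [0, 1]                    # pixel 2 has no ground truth and is ignored
loss = pointflow_loss(depth_gt, depth_preds, steps, valid)
print(loss)
```

Dividing by the step size expresses each iteration's error in units of its own hypothesis interval, so later, finer iterations are not drowned out by the coarse one.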

Method

Feature concatenation is similar to PixelNeRF: point coordinates + feature vectors. Here, however, the point positions change between iterations, so different feature vectors are sampled from the feature pyramid each time.

Because the 2D CNN is also being trained, the feature pyramid changes as well.

Play

Environment

(2023-12-26)

Installation on Lambda server (Ubuntu 18.04.6 LTS; nvcc -V returns 10.2; Driver Version: 470.103.01):


Error: No CUDA runtime is found, using CUDA_HOME='/usr/local/cuda'

- Traceback

      No CUDA runtime is found, using CUDA_HOME='/usr/local/cuda'
      running build_ext
      Traceback (most recent call last):
        File "setup.py", line 20, in <module>
          'build_ext': BuildExtension
        File "/home/zichen/.local/lib/python3.6/site-packages/setuptools/__init__.py", line 153, in setup
          return distutils.core.setup(**attrs)
        File "/home/zichen/anaconda3/envs/PointMVSNet/lib/python3.6/distutils/core.py", line 148, in setup
          dist.run_commands()
        File "/home/zichen/anaconda3/envs/PointMVSNet/lib/python3.6/distutils/dist.py", line 955, in run_commands
          self.run_command(cmd)
        File "/home/zichen/anaconda3/envs/PointMVSNet/lib/python3.6/distutils/dist.py", line 974, in run_command
          cmd_obj.run()
        File "/home/zichen/.local/lib/python3.6/site-packages/setuptools/command/build_ext.py", line 79, in run
          _build_ext.run(self)
        File "/home/zichen/anaconda3/envs/PointMVSNet/lib/python3.6/distutils/command/build_ext.py", line 339, in run
          self.build_extensions()
        File "/home/zichen/anaconda3/envs/PointMVSNet/lib/python3.6/site-packages/torch/utils/cpp_extension.py", line 404, in build_extensions
          self._check_cuda_version()
        File "/home/zichen/anaconda3/envs/PointMVSNet/lib/python3.6/site-packages/torch/utils/cpp_extension.py", line 777, in _check_cuda_version
          torch_cuda_version = packaging.version.parse(torch.version.cuda)
        File "/home/zichen/.local/lib/python3.6/site-packages/pkg_resources/_vendor/packaging/version.py", line 49, in parse
          return Version(version)
        File "/home/zichen/.local/lib/python3.6/site-packages/pkg_resources/_vendor/packaging/version.py", line 264, in __init__
          match = self._regex.search(version)
      TypeError: expected string or bytes-like object
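The last frames show `packaging.version.parse` receiving a non-string from `torch.version.cuda`. Together with the "No CUDA runtime is found" message, this is consistent with a CPU-only PyTorch build, where `torch.version.cuda` is `None`. A quick check along these lines can confirm it before rebuilding; the helper `diagnose_torch_cuda` and its return strings are made up for this sketch.

```python
def diagnose_torch_cuda(cuda_version, cuda_available):
    """Classify a PyTorch install from torch.version.cuda and torch.cuda.is_available()."""
    if cuda_version is None:
        # CPU-only wheel: building CUDA extensions cannot work with this install.
        return "cpu-only build"
    if not cuda_available:
        return "cuda build, but no usable GPU/driver"
    return "ok"


try:
    import torch
    print(diagnose_torch_cuda(torch.version.cuda, torch.cuda.is_available()))
except ImportError:
    print("torch is not installed in this environment")
```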

- Search the error with DDG.

- Reinstall with newer packages:

  “install_dependencies.sh”:

      #!/usr/bin/env bash
      conda create -n PointMVSNet python=3.10
      source activate PointMVSNet
      conda install pytorch==1.12.1 torchvision==0.13.1 cudatoolkit=10.2 -c pytorch
      conda install -c anaconda pillow
      pip install -r requirements.txt

  Error: identifier "AT_CHECK" is undefined.

  Full message

      building 'dgcnn_ext' extension
      creating build
      creating build/temp.linux-x86_64-cpython-310
      creating build/temp.linux-x86_64-cpython-310/csrc
      /usr/local/cuda/bin/nvcc -I/home/zichen/anaconda3/envs/PointMVSNet/lib/python3.10/site-packages/torch/include -I/home/zichen/anaconda3/envs/PointMVSNet/lib/python3.10/site-packages/torch/include/torch/csrc/api/include -I/home/zichen/anaconda3/envs/PointMVSNet/lib/python3.10/site-packages/torch/include/TH -I/home/zichen/anaconda3/envs/PointMVSNet/lib/python3.10/site-packages/torch/include/THC -I/usr/local/cuda/include -I/home/zichen/anaconda3/envs/PointMVSNet/include/python3.10 -c csrc/gather_knn_kernel.cu -o build/temp.linux-x86_64-cpython-310/csrc/gather_knn_kernel.o -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_BFLOAT16_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ --expt-relaxed-constexpr --compiler-options '-fPIC' -O2 -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE=\"_gcc\" -DPYBIND11_STDLIB=\"_libstdcpp\" -DPYBIND11_BUILD_ABI=\"_cxxabi1011\" -DTORCH_EXTENSION_NAME=dgcnn_ext -D_GLIBCXX_USE_CXX11_ABI=0 -gencode=arch=compute_61,code=compute_61 -gencode=arch=compute_61,code=sm_61 -std=c++14
      /home/zichen/anaconda3/envs/PointMVSNet/lib/python3.10/site-packages/torch/include/c10/core/SymInt.h(84): warning: integer conversion resulted in a change of sign
      /home/zichen/anaconda3/envs/PointMVSNet/lib/python3.10/site-packages/torch/include/ATen/Context.h(25): warning: attribute "__visibility__" does not apply here
      csrc/gather_knn_kernel.cu(34): error: identifier "AT_CHECK" is undefined
      csrc/gather_knn_kernel.cu(106): error: identifier "AT_CHECK" is undefined
      csrc/gather_knn_kernel.cu(125): error: identifier "THArgCheck" is undefined
      csrc/gather_knn_kernel.cu(145): error: identifier "THCudaCheck" is undefined
      csrc/gather_knn_kernel.cu(84): error: identifier "TH_INDEX_BASE" is undefined
      5 errors detected in the compilation of "/tmp/tmpxft_00002cb5_00000000-6_gather_knn_kernel.cpp1.ii".
      error: command '/usr/local/cuda/bin/nvcc' failed with exit code 1

  Perplexity (GPT4): Identifiers have been deprecated after PyTorch 1.0.

In PyTorch 1.5.0 and later, AT_CHECK has been replaced with TORCH_CHECK. Similarly, THArgCheck and THCudaCheck are no longer used in newer versions of PyTorch. The identifier TH_INDEX_BASE is also undefined because it’s no longer used in PyTorch 1.0 and later.

- Downgrade pytorch:

  AI葵 used torch 1.4. Issue: “TH_INDEX_BASE” is undefined #1

      #!/usr/bin/env bash
      conda create -n PointMVSNet python=3.8
      source activate PointMVSNet
      conda install pytorch==1.4.0 torchvision==0.5.0 cudatoolkit=10.1 -c pytorch
      conda install -c anaconda pillow
      pip install -r requirements.txt

  torchvision older than v0.6.1 doesn’t exist in conda:

  Full message

      Solving environment: failed with initial frozen solve. Retrying with flexible solve.

      PackagesNotFoundError: The following packages are not available from current channels:

        - torchvision==0.5.0

      Current channels:

        - https://conda.anaconda.org/pytorch/linux-64
        - https://conda.anaconda.org/pytorch/noarch
        - https://conda.anaconda.org/conda-forge/linux-64
        - https://conda.anaconda.org/conda-forge/noarch
        - https://repo.anaconda.com/pkgs/main/linux-64
        - https://repo.anaconda.com/pkgs/main/noarch
        - https://repo.anaconda.com/pkgs/r/linux-64
        - https://repo.anaconda.com/pkgs/r/noarch

      To search for alternate channels that may provide the conda package you're
      looking for, navigate to

          https://anaconda.org

      and use the search bar at the top of the page.

- Use pip to install torch 1.4. (perplexity)

      conda create -n PointMVSNet python=3.8
      source activate PointMVSNet
      conda install -c anaconda pillow
      pip install torch==1.4.0 torchvision==0.5.0
      pip install -r requirements.txt

  Then change TH_INDEX_BASE to 0 in “gather_knn_kernel.cu”. After that, the extension compiles successfully.
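The manual edit can also be scripted. The sketch below replaces the identifier only where it appears as a whole token, so longer names that merely contain it are left alone; `replace_th_index_base` is a hypothetical helper, and the file path in the comment is the one shown in the build log.

```python
import re


def replace_th_index_base(source: str) -> str:
    """Replace the removed TH_INDEX_BASE macro with the literal 0 (whole-word matches only)."""
    return re.sub(r"\bTH_INDEX_BASE\b", "0", source)


snippet = "int idx = index[i] - TH_INDEX_BASE;  // MY_TH_INDEX_BASE_X stays"
patched = replace_th_index_base(snippet)
print(patched)

# To patch the real file in place (path taken from the build log):
# from pathlib import Path
# path = Path("csrc/gather_knn_kernel.cu")
# path.write_text(replace_th_index_base(path.read_text()))
```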

P.S.:

- 3DGS also has knn code: simple-knn

- Open3D can also find the nearest neighbors.

Train & Eval

- Place the dataset (DTU) in the specified directory:

      mkdir data
      ln -s /home/zichen/Downloads/mvs_training/dtu/ ./data

- Training for 16 epochs takes approximately 3 days:

      export CUDA_VISIBLE_DEVICES=4,5,6,7
      python pointmvsnet/train.py --cfg configs/dtu_wde3.yaml

- Evaluate

  The “Rectified” dataset can’t be downloaded from the official website by clicking the hyperlink. This may be due to browser limitations, as the zip file is 123 GB (analyzed by Perplexity).

  Wget works:

      wget http://roboimagedata2.compute.dtu.dk/data/MVS/Rectified.zip

- Download message

      (base) zichen@lambda-server:/data/zichen$ wget http://roboimagedata2.compute.dtu.dk/data/MVS/Rectified.zip
      wget: /home/zichen/anaconda3/envs/GNT/lib/libuuid.so.1: no version information available (required by wget)
      --2023-12-30 19:30:31-- http://roboimagedata2.compute.dtu.dk/data/MVS/Rectified.zip
      Resolving roboimagedata2.compute.dtu.dk (roboimagedata2.compute.dtu.dk)... 130.225.69.128
      Connecting to roboimagedata2.compute.dtu.dk (roboimagedata2.compute.dtu.dk)|130.225.69.128|:80... connected.
      HTTP request sent, awaiting response... 301 Moved Permanently
      Location: https://roboimagedata2.compute.dtu.dk/data/MVS/Rectified.zip [following]
      --2023-12-30 19:30:32-- https://roboimagedata2.compute.dtu.dk/data/MVS/Rectified.zip
      Connecting to roboimagedata2.compute.dtu.dk (roboimagedata2.compute.dtu.dk)|130.225.69.128|:443... connected.
      HTTP request sent, awaiting response... 200 OK
      Length: 129593443783 (121G) [application/zip]
      Saving to: ‘Rectified.zip’

      Rectified.zip 100%[===========================>] 120.69G 3.48MB/s in 14h 18m

      2023-12-31 09:48:33 (2.40 MB/s) - ‘Rectified.zip’ saved [129593443783/129593443783]
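The sizes in the log can be cross-checked with a little arithmetic. The sketch assumes that wget's "G" and "MB/s" are binary units (powers of 1024), which matches the reported figures; the byte count and duration are copied from the log above.

```python
size_bytes = 129_593_443_783          # "Length:" reported by the server
elapsed_s = 14 * 3600 + 18 * 60       # "in 14h 18m"

size_gib = size_bytes / 2**30         # binary gigabytes, as wget prints them
size_gb = size_bytes / 1e9            # decimal gigabytes
rate_mib_s = size_bytes / elapsed_s / 2**20

print(f"{size_gib:.2f} GiB, {size_gb:.2f} GB, {rate_mib_s:.2f} MiB/s")
```

This gives about 120.69 GiB (129.59 GB in decimal units) at an average of about 2.40 MiB/s, in line with the progress bar and the final summary line. Comparing the size of the file on disk with the byte count is a cheap completeness check before unzipping.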

Unzip it
