Table of contents

- Source Video: Diffusion Models | Paper Explanation | Math Explained - Outlier

- Code: dome272/Diffusion-Models-pytorch

(2023-08-02)

Idea & Theory

Diffusion model is a generative model, so it learns the distribution of data 𝐗. (Discrimitive model learns labels. And MLE is a strategy to determine the distribution through parameters 𝚯)

The essential idea is to systematically and slowly

destroy structure in a data distribution through

an iterative forward diffusion process. We then

learn a reverse diffusion process that restores

structure in data, yielding a highly flexible and

tractable generative model of the data. [1]

Forward diffusion process:

Sample noise from a normal distribution¹ and add it to an image iteratively, until the original distribution of the image has been completely destroyed, becoming the same as the noise distribution.

-

The noise level (mean, variance) of each timestep is scaled by a schedule to avoid the variance explosion along with adding more noise.

-

The image distribution should be destroyed slowly, and the noise redundency at the end stage should be reduced.

-

OpenAI ³ proposed Cosine schedule in favor of the Linear schedule in DDPM².

Reverse diffusion process:

Predict the noise of each step.

-

Do not predict the full image in one-shot because that’s intractable and results in worse results¹.

-

Predicting the mean of the noise distribution and predicting the noise in the image directly are equivalent, just being parameterized differently ².

-

Predict noise directly, so it can be subtracted from image.

-

The variance σ² of the normal distribution followed by the noise can be fixed². But optimizing it together with the mean, the log-likehood will get improved ³.

Architecture

-

DDPM used UNet like model:

- Multi-level downsample + resnet block ➔ Low-res feature maps ➔ Upsample to origianl size

- Concatenate RHS feature maps with the LHS feature maps of the same resolution to supplement the location information for features at each pixel.

- Attend some of LHS and RHS feature maps by attention blocks to fuse features further.

- Time embedding is added to each level of feature maps for “identifying” the consistent amount of noise to be predicted during a forward pass at a timestep.

-

OpenAI 2nd paper(4) made improvement by modifying the model in:

- Increase levels, reduce width;

- More attention blocks, more heads;

- BigGAN residual block when downsampling and upsampling;

- Adaptive Group Normalization after each resnet block: GroupNorm + affine transform ( Time embedding * GN + Label embedding)

- Classifier guidance is a separate classifier that helps to generate images of a certain category.

Math Derivation

(2024-04-17)

- VAE and diffuseion model both follows MLE strategy to find the parameter corresponding to the desired data distribution.

- VAE solves the dataset distribution P(𝐗) by approximating ELBO;

- While diffusion model solves the dataset distribution P(𝐗) by minimizing the KL Divergence.

(2023-08-04)

VAE

-

VAE also wants to get the distribution of dataset P(𝐗), and an 𝐱 is generated by a latent variable 𝐳.

Therefore, based on Bayes theorem, p(𝐱) = p(𝐳) p(𝐱|𝐳) / p(𝐳|𝐱), where p(𝐳) is the prior.

And p(𝐳|𝐱) is intractable because in p(𝐳|𝐱) = p(𝐳)p(𝐱|𝐳) / p(𝐱), the p(𝐱) can’t be computed through ∫f(𝐱,𝐳)d𝐳 since 𝐳 is high dimensional continuous.

-

By introducing an approximated posterior q(𝐳|𝐱), log p(𝐱) = ELBO + KL-divergence.

$log p(𝐱) = E_{q(𝐳|𝐱)} log \frac{p(𝐱,𝐳)}{q(𝐳|𝐱)} + ∫ q(𝐳|𝐱) log \frac{q(𝐳|𝐱)}{p(𝐳)} d𝐳$

The KL-divergence can be integrated analytically.

ELBO is an expectation w.r.t q(𝐳|𝐱), which can technically be estimated using Monte Carlo sampling directly.

But when sampled q(𝐳ⁱ|𝐱) is around 0, the variance of $log\ q(𝐳|𝐱)$ would be high and make its gradint unstable, then cause the optimization difficult. And the function to be estimated $log(\frac{p_θ(𝐱,𝐳)}{q_φ(𝐳|𝐱)})$ involves two approximate models containing a lot error.

Thus, it’s not feasible to approximate ELBO directly.

-

To approximate ELBO, we analyse the generative model (Decoder) p(𝐱|𝐳).

Base on Bayes theorem, p(𝐱|𝐳) = p(𝐱) p(𝐳|𝐱)/p(𝐳).

By introducing the posterior approximation q(𝐳|𝐱), p(𝐱|𝐳) can derive: E(log p(𝐱|𝐳)) = ELBO + KL-divergence, i.e.,

$E_{q(𝐳|𝐱)}[ log p(𝐱|𝐳)] = E_{q(𝐳|𝐱)}[ log(\frac{p(𝐳|𝐱) p(𝐱)}{q(𝐳|𝐱)})] + ∫ q(𝐳|𝐱) log \frac{q(𝐳|𝐱)}{p(𝐳)} d𝐳$

Given 𝐳, the likelihood p(𝐱|𝐳) is supposed to be maximized. (The probiblity that the real 𝐱 is sampled should be maximum.)

Therefore, the parameters θ of generative model p(𝐱|𝐳) should be optimized via MLE (cross-entropy) loss.

-

Now since ELBO = E(log p(𝐱|𝐳)) - KL-divergence and KL-div is known, ELBO will be obtained by just computing E(log p(𝐱|𝐳)).

E(log p(𝐱|𝐳)) can be estimated by MC: sample a 𝐳 then compute log p(𝐱|𝐳), and repeat N times, take average.

The approximated E(log p(𝐱|𝐳)) should be close to the original 𝐱, so there is a MSE loss to optimize the parameters ϕ of the distribution of 𝐳.

- (2023-10-30) 𝐳’s distribution needs to be learned as well for sampling 𝐱.

But MC sampling is not differentiable, so ϕ cannot be optimized through gradient descent.

Therefore, reparameterization considers that 𝐳 comes from a differentiable determinstic transform of ε, a random noise, i.e., 𝐳 = μ + σε.

Then, parameters (μ, σ²) of 𝐳’s distribution (Encoder) will be optimized by MSE.

Forward process

-

The forward diffusion process is like the “Encoder” p(𝐳|𝐱) in VAE:

$$q(𝐳|𝐱) ⇒ q(𝐱ₜ | 𝐱ₜ₋₁)$$The distribution of image 𝐱ₜ at timestep t is determined by the image 𝐱ₜ₋₁ at the previous timestep, where smaller t means less noise.

Specifically, 𝐱ₜ follows a normal distribution with a mean of $\sqrt{1-βₜ}𝐱ₜ₋₁$ and a variance of $\sqrt{βₜ}𝐈$:

$$q(𝐱ₜ | 𝐱ₜ₋₁) = N(𝐱ₜ; \sqrt{1-βₜ} 𝐱ₜ₋₁, \sqrt{βₜ}𝐈)$$𝐱ₜ is similar to 𝐱ₜ₋₁ because its mean is around 𝐱ₜ₋₁.

-

An image 𝐱 is a “vector”, and each element of it is a pixel.

-

As timestep t increase, βₜ increases and (1-βₜ) decreases, which indicates the variance gets larger and the mean value gets smaller.

Intuitively, the value of the original pixel xₜ₋₁ is fading and more pixels become outliers resulting in a wider range of variation around the mean.

-

By introducing a notation $α = 1-βₜ$, the t-step evolution from 𝐱₀ to 𝐱ₜ can be simplied to a single expression instead of sampling t times iteratively.

-

Replace (1-βₜ) with α, the distribution becomes:

$q(𝐱ₜ | 𝐱ₜ₋₁) = N(𝐱ₜ; \sqrt{αₜ} 𝐱ₜ₋₁, (1-αₜ)𝐈)$

-

Based on the reparameterization trick, a sample from the distribution is:

$𝐱ₜ = \sqrt{αₜ} 𝐱ₜ₋₁ + \sqrt{1-αₜ} ε$

-

Similarly, $𝐱ₜ₋₁ = \sqrt{αₜ₋₁} 𝐱ₜ₋₂ + \sqrt{1-αₜ₋₁} ε$, and plug it into 𝐱ₜ.

-

Then $𝐱ₜ = \sqrt{αₜ₋₁} ( \sqrt{αₜ} 𝐱ₜ₋₂ + \sqrt{1-αₜ₋₁} ε ) + \sqrt{1-αₜ} ε$. Now, the mean becomes $\sqrt{αₜαₜ₋₁} 𝐱ₜ₋₂$

-

Given variance = 1 - (mean/𝐱)² in the above normal distribution $N(𝐱ₜ; \sqrt{αₜ} 𝐱ₜ₋₁, (1-αₜ)𝐈)$, and here mean = $\sqrt{αₜαₜ₋₁} 𝐱ₜ₋₂$,

the standard deviation should be $\sqrt{1 - αₜαₜ₋₁}$, then 𝐱ₜ becomes:

$𝐱ₜ = \sqrt{αₜαₜ₋₁} 𝐱ₜ₋₂ + \sqrt{1 - αₜαₜ₋₁} ε$

-

Repeatedly substituting intermediate states, the 𝐱ₜ can be represented with 𝐱₀ :

$𝐱ₜ = \sqrt{αₜαₜ₋₁ ... α₁} 𝐱₀ + \sqrt{1 - αₜαₜ₋₁ ... α₁} ε$

-

Denote the cumulative product “αₜαₜ₋₁ … α₁” as $\bar aₜ$, the 𝐱ₜ can be reached in one-shot.

$𝐱ₜ = \sqrt{\bar aₜ} 𝐱₀ + \sqrt{1 - \bar aₜ} ε$

-

The distribution of 𝐱ₜ given 𝐱₀ is:

$q(𝐱ₜ | 𝐱₀) = N(𝐱ₜ; \sqrt{\bar aₜ} 𝐱₀, (1 - \bar aₜ)𝐈)$

-

With this expression, the deterministic forward process is ready-to-use and only the reverse process needs to be learned by a network.

- That’s why in the formula below, they “reverse” the forward q(𝐱ₜ|𝐱ₜ₋₁) to q(𝐱ₜ₋₁|𝐱ₜ) resulting in the equation only containing “reverse process”: 𝐱ₜ₋₁|𝐱ₜ, which then can be learned by narrowing the gap between q(𝐱ₜ₋₁|𝐱ₜ) and p(𝐱ₜ₋₁|𝐱ₜ).

Reverse process

The reverse diffusion process is like the Decoder in VAE.

$$p(𝐱|𝐳) ⇒ p(𝐱ₜ₋₁𝐱ₜ₋₂..𝐱₀ | 𝐱ₜ)$$-

Given a noise image 𝐱ₜ, the distribution of less-noise image 𝐱ₜ₋₁ is

$p(𝐱ₜ₋₁ | 𝐱ₜ) = N(𝐱ₜ₋₁; μ_θ(𝐱ₜ, t), Σ_θ(𝐱ₜ, t))$

where the variance can be a fixed schedule as βₜ, so only the mean $μ_θ(𝐱ₜ, t)$ needs to be learned with a network.

VLB

VLB is the loss to be minimized. VLB gets simplied by:

-

Applying Bayes rule to “reverse” the direction of the forward process, which becomes “forward denoising” steps q(𝐱ₜ₋₁ | 𝐱ₜ), because it’s from a noise image to a less-noise image;

-

Adding extra conditioning on 𝐱₀ for each “forward denosing” step q(𝐱ₜ₋₁ | 𝐱ₜ, 𝐱₀).

Derivation by step:

-

Diffusion model wants a set of parameter 𝛉 letting the likelihood of the original image 𝐱₀ maximum.

$$\rm θ = arg max_θ\ log\ p_θ(𝐱₀)$$With adding a minus sign, the objective turns to find the minimum:

-log p(𝐱₀) = -ELBO - KL-divergence

$$ \begin{aligned} & -log p(𝐱₀) \left( = -log \frac{p(𝐱₀, 𝐳)}{p(𝐳|𝐱₀)} \right) \\\ &= -log \frac{p(𝐱_{1:T}, 𝐱₀)}{p(𝐱_{1:T} | 𝐱₀)} \\\ & \text{(Introduce "approximate posterior" q :)} \\\ &= -(log \frac{ p(𝐱_{1:T}, 𝐱₀) }{ q(𝐱_{1:T} | 𝐱₀)} \ + log (\frac{q(𝐱_{1:T} | 𝐱₀)}{p(𝐱_{1:T} | 𝐱₀)}) ) \\\ \end{aligned} $$-

Note that $q(𝐱_{1:T} | 𝐱₀)$ represents a joint distribution of N conditional distributions 𝐱ₜ and 𝐱ₜ₋₁.

-

It is the step-by-step design that makes training a network to learn the data distribution possible. Meanwhile, the sampling process also has to be step-by-step.

Compute expection w.r.t. $q(𝐱_{1:T} | 𝐱₀)$ for both side.

$$ E_{q(𝐱_{1:T} | 𝐱₀)} [ -log p(𝐱₀) ] \\\ \ = E_{q(𝐱_{1:T} | 𝐱₀)} \left[-log \frac{ p(𝐱_{0:T}) }{ q(𝐱_{1:T} | 𝐱₀)}\right] \ + E_{q(𝐱_{1:T} | 𝐱₀)} \left[-log (\frac{q(𝐱_{1:T} | 𝐱₀)}{p(𝐱_{1:T} | 𝐱₀)})\right] $$Expectation is equivalent to integration.

$$ \begin{aligned} & \text{LHS:} ∫_{𝐱_{1:T}} q(𝐱_{1:T} | 𝐱₀) * (-log p(𝐱₀)) d𝐱_{1:T} = -log p(𝐱₀) \\\ & \text{RHS:} \ = E_{q(𝐱_{1:T} | 𝐱₀)} \left[-log \frac{ p(𝐱_{0:T}) }{ q(𝐱_{1:T} | 𝐱₀)}\right] \\\ & + ∫_{𝐱_{1:T}} q(𝐱_{1:T} | 𝐱₀) * \left(-log (\frac{q(𝐱_{1:T} | 𝐱₀)}{p(𝐱_{1:T} | 𝐱₀)})\right) d𝐱_{1:T} \end{aligned} $$ -

-

Since KL-divergence is non-negative, there is:

-log p(𝐱₀) ≤ -log p(𝐱₀) + KL-divergence =

$$ \begin{aligned} & -log p(𝐱₀) + D_{KL}( q(𝐱_{1:T} | 𝐱₀) || p(𝐱_{1:T} | 𝐱₀) ) \\\ &= -log p(𝐱₀) \ + ∫_{𝐱_{1:T}} q(𝐱_{1:T} | 𝐱₀) * \left(log (\frac{q(𝐱_{1:T} | 𝐱₀)}{p(𝐱_{1:T} | 𝐱₀)})\right) d𝐱_{1:T} \end{aligned} $$ -

Break apart the denominator $p(𝐱_{1:T} | 𝐱₀)$ of the argument in the KL-divergence’s logarithm based on Bayes rule:

$$p(𝐱_{1:T} | 𝐱₀) = \frac{p(𝐱_{1:T}, 𝐱₀)}{p(𝐱₀)} = \frac{p(𝐱_{0:T})}{p(𝐱₀)}$$Plug it back to KL-divergence:

$$ \begin{aligned} &∫_{𝐱_{1:T}} q(𝐱_{1:T} | 𝐱₀) * \left( log(\frac{q(𝐱_{1:T} | 𝐱₀)}{p(𝐱_{1:T} | 𝐱₀)})\right) d𝐱_{1:T} \\\ &= ∫_{𝐱_{1:T}} q(𝐱_{1:T} | 𝐱₀) * log (\frac{q(𝐱_{1:T} | 𝐱₀) p(𝐱₀)}{p(𝐱_{0:T})}) d𝐱_{1:T} \\\ &= ∫_{𝐱_{1:T}} q(𝐱_{1:T} | 𝐱₀) * [ log(p(𝐱₀) + log(\frac{q(𝐱_{1:T} | 𝐱₀)}{p(𝐱_{0:T})})] d𝐱_{1:T}\\\ &= ∫_{𝐱_{1:T}} q(𝐱_{1:T} | 𝐱₀) * log(p(𝐱₀) d𝐱_{1:T} \\\ &\quad + ∫_{𝐱_{1:T}} q(𝐱_{1:T} | 𝐱₀) * log(\frac{q(𝐱_{1:T} | 𝐱₀)}{p(𝐱_{0:T})}) d𝐱_{1:T} \\\ &= log p(𝐱₀) + ∫_{𝐱_{1:T}} q(𝐱_{1:T} | 𝐱₀) * log(\frac{q(𝐱_{1:T} | 𝐱₀)}{p(𝐱_{0:T})}) d𝐱_{1:T} \end{aligned} $$ -

Plug this decomposed KL-divergence into the above inequality, and the incomputable log-likelihood (-log p(𝐱₀)) can be canceled, resulting in the Variational Lower Bound (VLB):

$$-log p(𝐱₀) ≤ ∫_{𝐱_{1:T}} q(𝐱_{1:T} | 𝐱₀)\ log(\frac{q(𝐱_{1:T} | 𝐱₀)}{p(𝐱_{0:T})}) d𝐱_{1:T}$$The argument of log is a ratio of the forward process and the reverse process.

The numerator is the distribution of $𝐱_{1:T}$ given the starting point 𝐱₀. To make the numerator and denominator have symmetric steps, the starting point of the reverse process $p(𝐱_T)$ can be separated out.

-

Separate out $p(𝐱_T)$ from the denominator by rewriting the conditional probability as a cumulative product:

$$ p(𝐱_{0:T}) = p(𝐱_T) Π_{t=1}^T p(𝐱ₜ₋₁|𝐱ₜ) $$Plug it back into the logarithm of the VLB, and break the numerator joint distribution as a product of N-1 steps as well:

$$ log(\frac{q(𝐱_{1:T} | 𝐱₀)}{p(𝐱_T) Π_{t=1}^T p(𝐱ₜ₋₁|𝐱ₜ)}) \= log \frac{ Π_{t=1}^T q(𝐱ₜ|𝐱ₜ₋₁)}{ p(𝐱_T) Π_{t=1}^T p(𝐱ₜ₋₁|𝐱ₜ)} \\\ \= log \frac{ Π_{t=1}^T q(𝐱ₜ|𝐱ₜ₋₁)}{ Π_{t=1}^T p(𝐱ₜ₋₁|𝐱ₜ)} - log p(𝐱_T) \\\ \= ∑_{t=1}^T log (\frac{q(𝐱ₜ|𝐱ₜ₋₁)}{p(𝐱ₜ₋₁|𝐱ₜ)}) - log\ p(𝐱_T) $$This form includes every step rather than only focusing on the distribution of the all events $𝐱_{1:T}$.

(2023-08-11) DM wants the data distribution, but it doesn’t rebuild the distribution transformation directly from Gaussian to data distribution, but approachs the corruption process step-by-step to reduce the difficulty (variance).

-

Separate the first item (first step, t=1) from the summation, so that the other terms can be conditioned on 𝐱₀, thus reducing the variance:

$$ log \frac{q(𝐱₁|𝐱₀)}{p(𝐱₀|𝐱₁)} + ∑_{t=2}^T log (\frac{q(𝐱ₜ|𝐱ₜ₋₁)}{p(𝐱ₜ₋₁|𝐱ₜ)}) - log\ p(𝐱_T) $$ -

Reformulate the numerator $q(𝐱ₜ|𝐱ₜ₋₁)$ based on Bayes rule:

$$ q(𝐱ₜ|𝐱ₜ₋₁) = \frac{q(𝐱ₜ₋₁|𝐱ₜ)q(𝐱ₜ)}{q(𝐱ₜ₋₁)} $$In this form, forward adding noise $q$ and reverse denoising $p$ become the same process from 𝐱ₜ to 𝐱ₜ₋₁. Such that, in one pass, the model can both perform forward process and reverse process once.

-

Make each step conditioned on 𝐱₀ to reduce the variance (uncertainty).

$$ q(𝐱ₜ|𝐱ₜ₋₁) = \frac{q(𝐱ₜ₋₁|𝐱ₜ, 𝐱₀)q(𝐱ₜ| 𝐱₀)}{q(𝐱ₜ₋₁| 𝐱₀)} $$And this distribution $q(𝐱ₜ₋₁|𝐱ₜ, 𝐱₀)$ has a closed-form solution.

Here is why the first step is separated out: If t=1, the $q(𝐱₁|𝐱₀)$ conditioned on 𝐱₀ is:

$$ q(𝐱₁|𝐱₀) = \frac{q(𝐱₀|𝐱₁, 𝐱₀)q(𝐱₁|𝐱₀)}{q(𝐱₀|𝐱₀)} $$There is a loop of $q(𝐱₁|𝐱₀)$ if 𝐱₀ exists, and other terms $q(𝐱₀|𝐱₁, 𝐱₀)$ and $q(𝐱₀|𝐱₀)$ don’t make sense.

Plug the newly conditioned numerator back to the fraction, and break it apart based on log rule:

$$ ∑_{t=2}^T log \frac{q(𝐱ₜ|𝐱ₜ₋₁)}{p(𝐱ₜ₋₁|𝐱ₜ)} \ = ∑_{t=2}^T log \frac{q(𝐱ₜ₋₁|𝐱ₜ, 𝐱₀)q(𝐱ₜ| 𝐱₀)}{p(𝐱ₜ₋₁|𝐱ₜ)q(𝐱ₜ₋₁| 𝐱₀)} \\\ \ = ∑_{t=2}^T log \frac{q(𝐱ₜ₋₁|𝐱ₜ, 𝐱₀)}{p(𝐱ₜ₋₁|𝐱ₜ)} + ∑_{t=2}^T log \frac{q(𝐱ₜ| 𝐱₀)}{q(𝐱ₜ₋₁| 𝐱₀)} \\\ $$The second term will be simplied to $log \frac{q(𝐱_T| 𝐱₀)}{q(𝐱₁| 𝐱₀)}$

Then, the variational lower bound becomes:

$$ D_{KL}(q(𝐱_{1:T}|𝐱₀) || p(𝐱_{0:T})) = \\\ log \frac{q(𝐱₁|𝐱₀)}{p(𝐱₀|𝐱₁)} \ + ∑_{t=2}^T log \frac{q(𝐱ₜ₋₁|𝐱ₜ, 𝐱₀)}{p(𝐱ₜ₋₁|𝐱ₜ)} \ + log \frac{q(𝐱_T| 𝐱₀)}{q(𝐱₁| 𝐱₀)} \ - log\ p(𝐱_T) \\\ \ \\\ \ = ∑_{t=2}^Tlog \frac{ q(𝐱ₜ₋₁|𝐱ₜ, 𝐱₀) }{ p(𝐱ₜ₋₁|𝐱ₜ)} \ + log \frac{q(𝐱_T| 𝐱₀)}{p(𝐱₀|𝐱₁)} \ - log\ p(𝐱_T) \\\ \ \\\ \ = ∑_{t=2}^T log \frac{ q(𝐱ₜ₋₁|𝐱ₜ, 𝐱₀) }{ p(𝐱ₜ₋₁|𝐱ₜ)} \ + log \frac{q(𝐱_T| 𝐱₀)}{p(𝐱_T)} \ - log p(𝐱₀|𝐱₁) $$Write this formula as KL-divergence, so that a concrete expression can be determined later.

How are those two fractions written as KL-divergence? $$ \begin{aligned} & ∑_{t=2}^T D_{KL} (q(𝐱ₜ₋₁|𝐱ₜ, 𝐱₀) || p(𝐱ₜ₋₁|𝐱ₜ)) \\\ & + D_{KL} (q(𝐱_T| 𝐱₀) || p(𝐱_T)) \\\ & - log\ p(𝐱₀|𝐱₁) \end{aligned} $$

Loss function

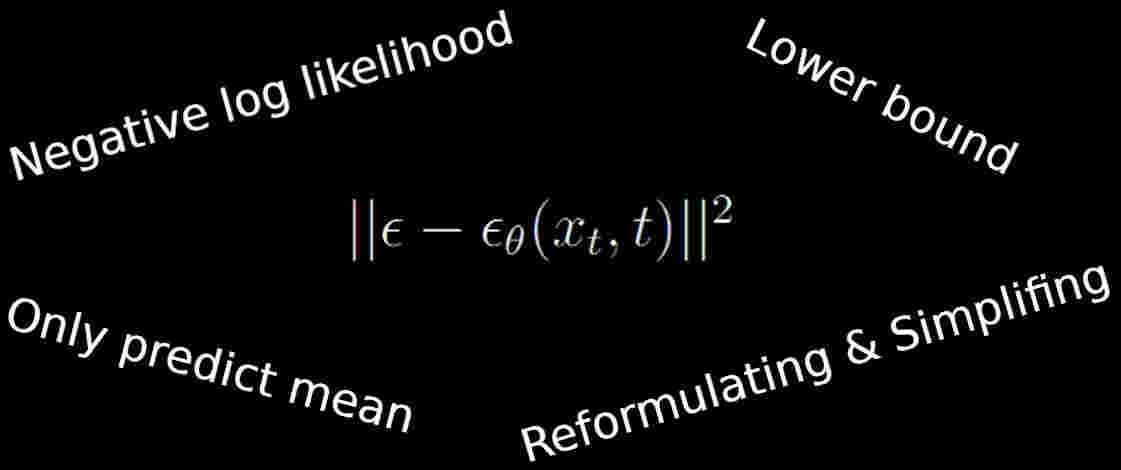

The VLB to be minimized is eventually derived as a MSE loss function between the actual noise and the predicted noise.

-

$D_{KL} (q(𝐱_T| 𝐱₀) || p(𝐱_T))$ can be ignored.

- $q(𝐱_T| 𝐱₀)$ has no learnable parameters because it just adds noise following a schedule.

- And $p(𝐱_T)$ is the noise image sampled from normal distribution. Since $q(𝐱_T| 𝐱₀)$ is the eventual image which is supposed to follow the normal distribution, this KL-divergence should be small.

Then, the loss only contains the other two terms:

$$L = ∑_{t=2}^T D_{KL} (q(𝐱ₜ₋₁|𝐱ₜ, 𝐱₀) || p(𝐱ₜ₋₁|𝐱ₜ)) - log\ p(𝐱₀|𝐱₁)$$ -

$D_{KL} (q(𝐱ₜ₋₁|𝐱ₜ, 𝐱₀) || p(𝐱ₜ₋₁|𝐱ₜ))$ is the MSE between the actual noise and the predicted noise.

-

For the reverse pass, the distribution of the denoised image $p(𝐱ₜ₋₁|𝐱ₜ)$ has a parametric expression:

$$p(𝐱ₜ₋₁|𝐱ₜ) = N(𝐱ₜ₋₁; μ_θ(𝐱ₜ,t), Σ_θ(𝐱ₜ,t)) \\\ = N(𝐱ₜ₋₁; μ_θ(𝐱ₜ,t), β𝐈)$$where Σ is fixed as βₜ𝐈, and only the mean $μ_θ(𝐱ₜ,t)$ will be learned and represented by a network (output) through the MSE loss of noise as below.

-

For the (“reversed”) forward pass, the distribution of noise-added image $q(𝐱ₜ₋₁|𝐱ₜ, 𝐱₀)$ has a closed-form solution, which can be written as a similar expression as p(𝐱ₜ₋₁|𝐱ₜ): What’s the derivation?

$$ q(𝐱ₜ₋₁|𝐱ₜ, 𝐱₀) = N(𝐱ₜ₋₁; \tilde μₜ(𝐱ₜ,𝐱₀), \tilde βₜ𝐈) \\\ \ \\\ \tilde βₜ = \frac{1- \bar αₜ₋₁}{1-\bar αₜ} ⋅ βₜ \\\ \ \\\ \tilde μ(𝐱ₜ,𝐱₀) = \frac{\sqrt{αₜ} (1-\bar αₜ₋₁) }{1-\bar αₜ} 𝐱ₜ \ + \frac{\sqrt{\bar αₜ₋₁} βₜ}{1-\bar αₜ} 𝐱₀ \\\ \ \\\ \rm Is there \sqrt{αₜ} or \sqrt{\bar αₜ} ? $$where the $\tilde βₜ$ is fixed, so only consider the $\tilde μ(𝐱ₜ,𝐱₀)$, which can be simplified by the one-step forward process expression: $𝐱ₜ = \sqrt{\bar αₜ} 𝐱₀ + \sqrt{1 - \bar αₜ} ε$

$$ 𝐱₀ = \frac{𝐱ₜ - \sqrt{1 - \bar αₜ} ε}{\sqrt{\bar αₜ}} $$Plug 𝐱₀ into $\tilde μ(𝐱ₜ,𝐱₀)$, then the mean of the noise-added image doesn’t depend on 𝐱₀ anymore:

$$ \begin{aligned} \tilde μ(𝐱ₜ,𝐱₀) & = \frac{\sqrt{αₜ} (1-\bar αₜ₋₁) }{1-\bar αₜ} 𝐱ₜ \ + \frac{\sqrt{\bar αₜ₋₁} βₜ}{1-\bar αₜ} \ \frac{𝐱ₜ - \sqrt{1 - \bar αₜ} ε}{\sqrt{\bar αₜ}} \\\ \ \\\ & = ???\ How \ to \ do? \ ??? \\\ & = \frac{1}{\sqrt{αₜ}} (𝐱ₜ - \frac{βₜ}{\sqrt{1 - \bar αₜ}} ε) \end{aligned} $$The mean of the distribution from which the noise-added image (𝐱ₜ,𝐱₀) at timestep t get sampled out is subtracting some random noise from image 𝐱ₜ.

𝐱ₜ is known from the forward process schedule, and the $\tilde μ(𝐱ₜ,𝐱₀)$ is the target for the network to optimize weights to make the predicted mean $μ_θ(𝐱ₜ,t)$ same as $\tilde μ(𝐱ₜ,𝐱₀)$.

-

Since network only output μ, the KL-divergence in the loss function can be simplified in favor of using MSE:

$$ Lₜ = \frac{1}{2σₜ²} \\| \tilde μ(𝐱ₜ,𝐱₀) - μ_θ(𝐱ₜ,t) \\|² $$This MSE indicates that the noise-added image in the forward process and the noise-removed image in the reverse process should be as close as possible.

Since the actual mean $\tilde μ(𝐱ₜ,𝐱₀) = \frac{1}{\sqrt{αₜ}} (𝐱ₜ - \frac{βₜ}{\sqrt{1 - \bar αₜ}} ε)$, where 𝐱ₜ is known, as it’s the input to the network. So the model is essentially estimating the actual $ε$ (random noise) every time.

Hence, the predicted mean $μ_θ(𝐱ₜ,t)$ by the model can be written in the same form as $\tilde μ(𝐱ₜ,𝐱₀)$, where only the noise $ε_θ$ has parameters:

$$μ_θ(𝐱ₜ,t) = \frac{1}{\sqrt{αₜ}} (𝐱ₜ - \frac{βₜ}{\sqrt{1 - \bar αₜ}} ε_θ((𝐱ₜ,t)))$$Therefore, the loss term becomes:

$$ Lₜ = \frac{1}{2σₜ²} \\| \tilde μ(𝐱ₜ,𝐱₀) - μ_θ(𝐱ₜ,t) \\|² \\\ \ = \frac{1}{2σₜ²} \left\\| \frac{1}{\sqrt{αₜ}} (𝐱ₜ - \frac{βₜ}{\sqrt{1 - \bar αₜ}} ε) \ - \frac{1}{\sqrt{αₜ}} (𝐱ₜ - \frac{βₜ}{\sqrt{1 - \bar αₜ}} ε_θ(𝐱ₜ,t)) \right\\|² \\\ \ = \frac{βₜ²}{2σₜ² αₜ (1-\bar αₜ)} \\|ε - ε_θ(𝐱ₜ,t) \\|² $$Disregarding the scaling factor can bring better sampling quality and easier implementation, so the final loss for the KL-divergence is MSE between actual noise and predicted noise at time t:

$$\\|ε - ε_θ(𝐱ₜ,t) \\|²$$ -

Once the mean $μ_θ(𝐱ₜ,t)$ has predicted out based on 𝐱ₜ and t, a “cleaner” image can be sampled from the distribution:

$$ N(𝐱ₜ₋₁; μ_θ(𝐱ₜ,t), \sigma_θ(𝐱ₜ,t)) = N(𝐱ₜ₋₁; \frac{1}{\sqrt{αₜ}} (𝐱ₜ - \frac{βₜ}{\sqrt{1 - \bar αₜ}} ε_θ(𝐱ₜ,t), βₜ𝐈) $$ By using reparameterization trick, this sampled image is: $$ 𝐱ₜ₋₁ = μ_θ(𝐱ₜ,t) + σε \ = \frac{1}{\sqrt{αₜ}} (𝐱ₜ - \frac{βₜ}{\sqrt{1 - \bar αₜ}} ε_θ(𝐱ₜ,t) + \sqrt{βₜ}ε $$

-

-

The last term $log p(𝐱₀|𝐱₁)$ in the VLB is the predicted distribution for the original image 𝐱₀. Its goodness is measured by a probability that the original image $𝐱₀$ gets sampled from the estimated distribution $N(x; μ_θⁱ(𝐱₁,1), β₁)$.

The probability of an image should be a product of total D pixels. And the probability a pixel should be an integral over an interval [δ₋, δ₊] of the PDF curve:

$$ p_θ(𝐱₀|𝐱₁) = ∏_{i=1}^D ∫_{δ₋(x₀ⁱ)}^{δ₊(x₀ⁱ)} N(x; μ_θⁱ(𝐱₁,1), β₁) dx $$- where $x₀$ is the pixel’s ground-truth.

- $N(x; μ_θⁱ(𝐱₁,1), β₁)$ is the distribution to be integrated.

This interval is determined based on the actual pixel value as:

$$ δ₊(x) = \begin{cases} ∞ & \text{if x = 1} \\\ x+\frac{1}{255} & \text{if x < 1} \end{cases}, \quad δ₋(x) = \begin{cases} -∞ & \text{if x = -1} \\\ x-\frac{1}{255} & \text{if x > -1} \end{cases} $$-

The original pixel range [0,255] has been normalized to [-1, 1] to align with the standard normal distribution $p(x_T) \sim N(0,1)$

-

If the actual value is 1, the integral upper bound in the distribution is ∞, and the lower bound is 1-1/255 = 0.996, the width of the interval is from 0.996 to infinity.

If the actual value is 0.5, the upper bound is 0.5+1/255, and the lower bound is 0.5-1/255, the width of the interval is 2/255.

pic: area of the true pixel region in two predicted distributions.

If the area around the actual pixel value under the predicted distribution PDF curve is large, the predicted distribution is good. Howerver, if the area around real pixel value is small, the estimated mean is wrongly located.

Hence, this probability (log-likelihood) should be maximized, and by condering the minus sign in front of it, the corresponding loss term comes.

However, the authors got rid of this loss term $-log p(𝐱₀|𝐱₁)$ when training the network. And the consequense is at inference time, the final step from 𝐱₁ to 𝐱₀ doesn’t add noise, because this step wasn’t get optimized. Therefore, The difference from other sampling steps is that the predicted 𝐱₀ doesn’t plus random noise.

$$ \begin{aligned} \text{t>1:}\quad 𝐱_{t-1} &= \frac{1}{\sqrt{αₜ}} (𝐱ₜ - \frac{βₜ}{\sqrt{1 - \bar αₜ}} ε_θ(𝐱ₜ,t) + \sqrt βₜ ε) \\\ \text{t=1:}\quad 𝐱_{t-1} &= \frac{1}{\sqrt{αₜ}} (𝐱ₜ - \frac{βₜ}{\sqrt{1 - \bar αₜ}} ε_θ(𝐱ₜ,t)) \end{aligned} $$A simple reason is that we don’t want to add noise to the final denoised clear output image 𝐱₀. Otherwise, the generated image is low-quality.

The complete loss function is MSE:

$$ \begin{aligned} \rm L_{simple} &= E_{t,𝐱₀,ε} [ || ε - ε_θ(𝐱ₜ,t)||² ] \\\ &= E_{t,𝐱₀,ε} [ || ε - ε_θ( \sqrt{\bar aₜ} 𝐱₀ + \sqrt{1 - \bar aₜ} ε, t) ||² ] \end{aligned} $$- t is sampled from a uniform distribution between 1 and t;

- 𝐱ₜ is the one-step forward process.

Algorithms

DDPM paper

Training a model:

\begin{algorithm}

\caption{Training}

\begin{algorithmic}

\REPEAT

\STATE Sample a t from U(0,T)

\STATE Select an input image 𝐱₀ from dataset

\STATE Sample a noise from N(0,𝐈)

\STATE Perform gradient descent with loss: \\\\

$||ε - ε_θ(\sqrt{\bar aₜ} 𝐱₀ + \sqrt{1 - \bar aₜ} ε, t)||²$

\UNTIL{converge}

\end{algorithmic}

\end{algorithm}

Sampling from the learned data distribution by means of reparameterization trick:

\begin{algorithm}

\caption{Sampling}

\begin{algorithmic}

\STATE Sample a noise image $𝐱_T \sim N(0,𝐈)$

\FOR{t = T:1}

\COMMENT{Remove noise step-by-step}

\IF{t=1}

\STATE ε=0

\ELSE

\STATE ε ~ N(0,𝐈)

\ENDIF

\STATE $𝐱ₜ₋₁ = \frac{1}{\sqrt{αₜ}} (𝐱ₜ - \frac{1-αₜ}{\sqrt{1 - \bar αₜ}} ε_θ(𝐱ₜ,t) + \sqrt{σₜ}ε$

\COMMENT{Reparam trick}

\ENDFOR

\RETURN 𝐱₀

\end{algorithmic}

\end{algorithm}

In this reparametrization formula change βₜ and $\sqrt{βₜ}$ to 1-αₜ and σₜ, which are different from the above equation.

Training and Sampling share the common pipeline:

Improvements

Improvements from OpenAI’s 2021 papers.

-

Learn a scale factor for interpolating the upper and lower bound to get a flexible variance:

$$Σ_θ(xₜ,t) = exp(v\ log βₜ +(1-v)\ log(1- \tilde{βₜ}))$$v is learned by adding an extra loss term $λ L_{VLB}$, and λ=0.001.

$$L_{hybrid} = E_{t,𝐱₀,ε} [ || ε - ε_θ(𝐱ₜ,t)||² ] + λ L_{VLB}$$ -

Use cosine noise schedule $f(t)=cos(\frac{t/T+s}{1+s}⋅π/2)²$ in favor of linear schedule.